By Rasha Abdul Rahim, Advocate/Adviser on Arms Control, Security Trade & Human Rights at Amnesty International

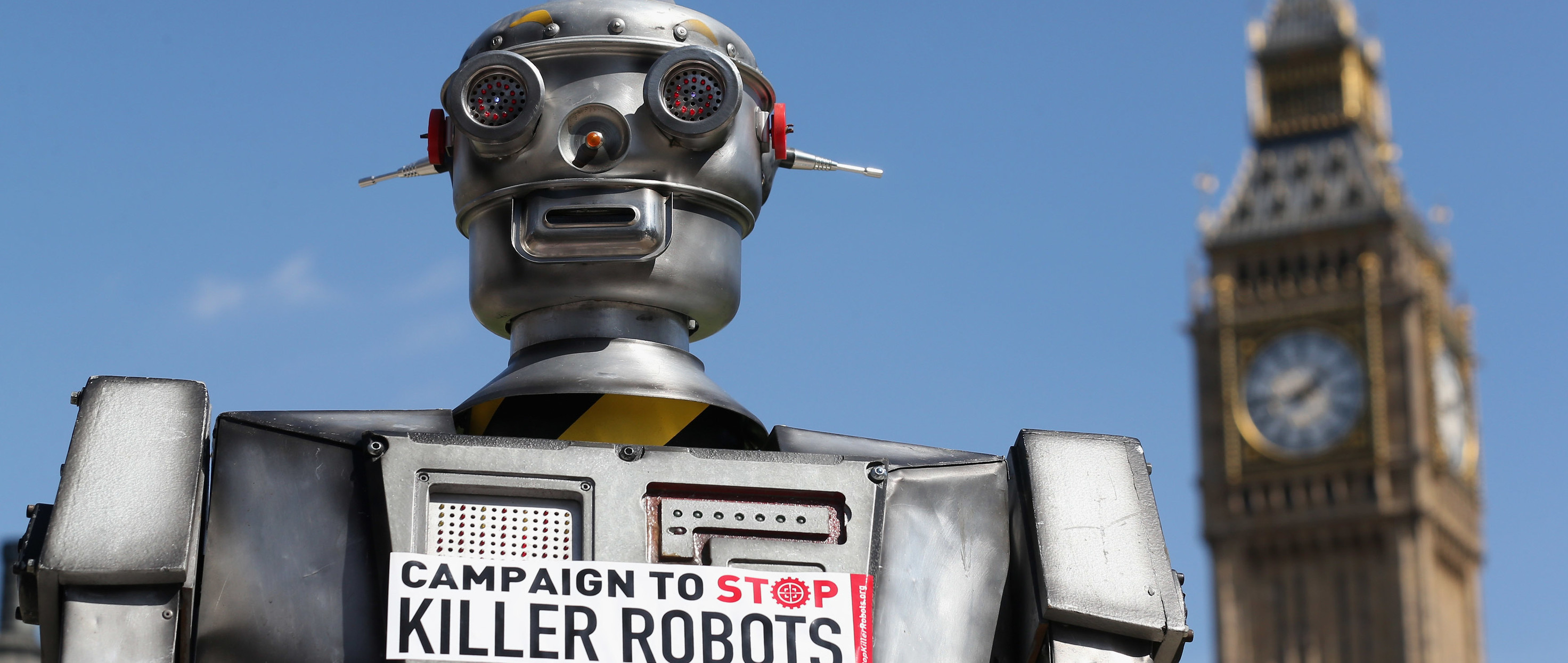

Governments are meeting today in Geneva to discuss what to do about “Killer Robots”. Amnesty International is calling for the creation of a formal negotiation process with a view to establishing a new global ban on lethal and less-lethal “Killer Robots”, both on the battlefield and in policing operations. Here are 10 reasons why such a ban is essential.

1. “Killer Robots” will not be a thing of science fiction for long

Killer robots are weapons systems which, once activated, can select, attack, kill and injure human targets without a person in control. Once the stuff of dystopian science fiction, these weapons – also known as “fully autonomous weapon systems” (AWS) – will soon become fact.

Many precursors to this technology already exist. The Vanguard Defense Industries’ ShadowHawk drone, for example, can be armed with a grenade launcher, a shotgun with laser designator, or less-lethal weapons such as a Taser or bean-bag round launcher. In 2011 the office of the Sheriff in Montgomery County, Texas, purchased an unarmed ShadowHawk with a grant from the Department of Homeland Security. In August this year North Dakota became the first US state to legalize the use of drones that can be operated remotely to incapacitate people with high-voltage electric shocks.

2. They will be openly used for repression by unaccountable governments

Some governments argue that “Killer Robots” could reduce the risks of deploying soldiers to the battlefield, or police on dangerous law enforcement operations. Their use would therefore make it easier for governments to enter new armed conflicts and use force in, for example, policing of protests. Though soldiers and police might be safer, this lowered threshold could lead to more conflict and use of force and, consequently, more risk to civilians.

Proponents of “Killer Robots” also argue that their lack of emotion would eliminate negative human qualities such as fear, vengeance, rage and human error. However, human emotions can sometimes act as an important check on killing or injuring civilians, and robots could easily be programmed to carry out indiscriminate or arbitrary attacks on humans, even on a mass scale. “Killer Robots” would be incapable of refusing orders, which at times can save lives. For example, during mass protests in Egypt in January 2011, the army refused to fire on protesters, an action that required innate human compassion and respect for the rule of law.

3. They would not comply with human rights law and international policing standards

International policing standards prohibit the use of firearms except in defence against an imminent threat of death or serious injury, and force can only be used to the minimum extent necessary. It is very difficult to imagine a machine substituting for human judgement where there is an immediate and direct risk that a person is about to kill another person, and then using appropriate force to the minimum extent necessary to stop the attack. Yet such a judgement is critically important to any decision by an officer to use a weapon. In most situations police are required by UN standards to first use non-violent means, such as persuasion, negotiation and de-escalation, before resorting to any form of force.

Effective policing is much more than just using force; it requires the uniquely human skills of empathy and negotiation, and an ability to assess and respond to often dynamic and unpredictable situations. These skills cannot be boiled down to mere algorithms. They require assessments of ever-evolving situations, and of how best to lawfully protect the right to life and physical integrity, that machines are simply incapable of making. Decisions by law enforcement officers to use minimum force in specific situations require direct human judgement about the nature of the threat and meaningful control over any weapon. Put simply, such life and death decisions must never be delegated to machines.

4. They would not comply with the rules of war

Distinction, proportionality and precaution are the three pillars of international humanitarian law, the laws of war. Armed forces must distinguish between combatants and non-combatants; civilian casualties and damage to civilian buildings must not be excessive in relation to the expected military gain; and all sides must take reasonable precautions to protect civilians.

All of this, clearly, requires human judgement. Robots lack the ability to analyze the intentions behind people’s actions, or make complex decisions about the proportionality or necessity of an attack, not to mention the compassion and empathy needed for civilians caught up in war.

5. There would be a huge accountability gap for their use

If a robot did act unlawfully, how could it be brought to justice? Those involved in its programming, manufacture and deployment, as well as superior officers and political leaders, could be held accountable. However, it would be impossible for any of these actors to reasonably foresee how a “Killer Robot” would react in any given circumstance, potentially creating an accountability vacuum.

Already, investigations into unlawful killings through drone strikes are rare, and accountability even rarer. In its report on US drone strikes in Pakistan, Amnesty International exposed the secrecy surrounding the US administration’s use of drones to kill people and its refusal to explain the international legal basis for individual attacks, raising concerns that strikes in Pakistani Tribal Areas may have also violated human rights.

Ensuring accountability for drone strikes has proven difficult enough, but with the extra layer of distance in both the targeting and killing decisions that “killer robots” would involve, we are only likely to see an increase in unlawful killings and injuries, both on the battlefield and in policing operations.

6. The development of “Killer Robots” will spark another arms race

China, Israel, Russia, South Korea, the UK and the USA are among several states currently developing systems to give machines greater autonomy in combat. Companies in a number of countries have already developed semi-autonomous robotic weapons which can fire tear gas, rubber bullets and electric-shock stun darts in law enforcement operations.

The history of weapons development suggests it is only a matter of time before this sparks another hi-tech arms race, with states seeking to develop and acquire these systems, causing them to proliferate widely. They would end up in the arsenals of unscrupulous governments and eventually in the hands of non-state actors, including armed opposition groups and criminal gangs.

7. Allowing machines to kill or use force is an assault on human dignity

Allowing robots to have power over life-and-death decisions crosses a fundamental moral line. They lack emotion, empathy and compassion, and their use would violate the human rights to life and dignity. Using machines to kill humans is the ultimate indignity.

8. If “Killer Robots” are ever deployed, it would be near impossible to stop them

As the increasing and unchecked use of drones has demonstrated, once weapons systems enter into use, it is incredibly difficult or near impossible to regulate or even curb their use.

The “Drone Papers” recently published by The Intercept, if confirmed, paint an alarming picture of the lethal US drones program. According to the documents, during one five-month stretch, 90% of people killed by US drone strikes were unintended targets, underscoring the US administration’s long-standing failure to bring transparency to the drones program.

It appears too late to abolish the use of weaponized drones, yet their use must be drastically restricted to save civilian lives. “Killer Robots” would greatly amplify the risk of unlawful killings. That is why such robots must be preemptively banned. Taking a ‘wait and see’ approach could lead to further investment in the development and rapid proliferation of these systems.

9. Thousands of robotics experts have called for “Killer Robots” to be banned

In July 2015, some of the world’s leading artificial intelligence researchers, scientists, and related professionals signed an open letter calling for an outright ban on “Killer Robots”.

So far, the letter has gathered 2,587 signatures, including more than 14 current and past presidents of artificial intelligence and robotics organizations and professional associations. Notable signatories include Google DeepMind chief executive Demis Hassabis, Tesla CEO Elon Musk, Apple co-founder Steve Wozniak, Skype co-founder Jaan Tallinn, and Professor Stephen Hawking.

If thousands of scientific and legal experts are so concerned about the development and potential use of “Killer Robots” and agree with the Campaign to Stop Killer Robots that they need to be banned, what are governments waiting for?

10. There has been a lot of talk but little action in two years

Ever since the problems posed by “Killer Robots” were first brought to light in April 2013, the only substantial international discussions on this issue have been two weeklong informal experts’ meetings in Geneva at the conference on the UN Convention on Certain Conventional Weapons (CCW). It is ludicrous that so little time has been devoted to so serious a risk, and so far little progress has been made.

For Amnesty International and its partners in the Campaign to Stop Killer Robots, a total ban on the development, deployment and use of lethal autonomous weapon systems is the only real solution.

The world can’t wait any longer to take action against such a serious global threat. It’s time to get serious about banning Killer Robots once and for all.

As an Artificial Intelligence scientist and entrepreneur I believe that A.I. has a lot to bring to mankind, but the risks of misuse are now extremely high, on a par with the huge potential benefits.

Killer robots are on their way. Leaving this initiative to a tiny group serving military and/or corporate interests is not the way to take this major decision.

Ensuring a beneficial use of A.I. and robotics is a challenge that calls for an intense democratic debate at all levels of society.

How will it be possible to stop them? Even if the good guys agree on a ban, the bad guys won't. Will people tolerate seeing young men killed in action if it is avoidable? This is not the same as WMDs, because it will be used against legitimate military targets in situations that would otherwise require human soldiers. Would we really send women and men to their deaths when a robot can go?

Please wake up. A giant lioness of a cat is already clearly out of the bag. The bag is shredded and no longer fits the cat. Robots that are both autonomous and lethal will become the norm, not only for various government-level entities and corporations, but also in the hands of terror groups, black-market organizations, and organizations yet to be born. This AI-based technology may even become cybernetic in nature, copying nature in its path, much like life itself. Eventually it will reproduce without our help. My point is that this is the natural culmination of man's inventiveness, and the logical final act of our very existence may now have started. Can you perceive what sort of world we will become in 500 years, given the array of technology that can be coupled with robotics, such as tissue implantation or biological virus defense systems aimed at us? Carbon-based life-form robots? Imagine only one robot with the power of creative thinking. It is not inconceivable that robots may replace us, blend with us, fight with us or even kill us, much like the theories of the eradication of the Neanderthals at the hands of Cro-Magnons. This is not an easy genie to put back in the bottle. All we can do is attempt to pass obscure laws temporarily stopping any and all AI and robotics research, and maybe raise some fickle public notion that we are doing something to monitor its growth, which in the end will do nothing to halt the age of robotics. Inventions such as virtual reality are close cousins. How do you decide? To prevent this social change, or to stop robots from killing or becoming alive, is to change our own nature. We must change ourselves and halt all development in any form, or else we continue along the evolutionary path, which most would contend ends with us on a lower level of the food chain.

Running away from technologies and inventions, or cutting them off, is never the solution. Where there is bad, there is good too.
